What Is a Machine Learning Pipeline?

A machine learning pipeline is the series of steps involved in building and deploying a machine learning model. Working through these steps carefully helps ensure that the model performs well and can be used in real-world applications. In this article, we will explore each step of the pipeline in detail and discuss best practices for implementing it.

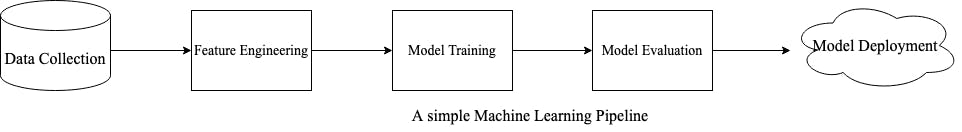

The figure above illustrates a simple machine learning pipeline. It is divided into five stages:

Data Collection and Preparation

Feature Engineering

Model Training

Model Evaluation

Model Deployment

Data Collection and Preparation

The first step in the machine learning pipeline is data collection and preparation. In this step, data is collected and cleaned to prepare it for use in the model. This may involve tasks such as removing or filling in missing values, correcting or discarding incorrect records, converting the data into a usable format, and combining multiple datasets.
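As a minimal sketch of these cleaning tasks, assuming pandas and two small made-up tables (`orders` and `customers` are hypothetical, not from a real dataset), the following drops duplicate rows, converts a text column to numbers, discards rows that cannot be repaired, and joins the two sources on a shared key:

```python
import pandas as pd

# Two hypothetical sources describing the same customers (illustrative data only).
orders = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "amount": ["19.99", "5.00", "5.00", "not available", "42.50"],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3, 4],
    "country": ["DE", "FR", None, "US"],
})

# Remove exact duplicate rows (customer 2's order appears twice).
orders = orders.drop_duplicates()

# Convert text to numbers; anything unparseable becomes NaN instead of raising.
orders["amount"] = pd.to_numeric(orders["amount"], errors="coerce")

# Drop rows whose amount could not be recovered.
orders = orders.dropna(subset=["amount"])

# Combine the two datasets; validate= checks that each order matches at most one customer.
combined = orders.merge(customers, on="customer_id", how="left", validate="many_to_one")

print(combined)
```

Whether to drop or impute an unrecoverable value is a judgment call; dropping is used here only to keep the example short.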

Effective data collection and preparation are crucial for building a good machine-learning model. Poor-quality data can lead to poor model performance and make it difficult to draw accurate conclusions from the model's predictions. It is important to carefully consider the sources of the data and ensure that it is relevant and accurate for the problem being solved.

There are many tools and techniques that can be used for data collection and preparation, including:

Data wrangling tools: These tools, such as OpenRefine or Trifacta, can be used to clean and transform data.

Data visualization tools: These tools, such as Tableau or Matplotlib, can be used to explore and understand the data.

Data preprocessing libraries: These libraries, such as scikit-learn or pandas, can be used to apply common preprocessing steps, such as scaling or imputation, to the data.

It is also important to follow best practices when collecting and preparing data. Some best practices to keep in mind include:

Ensuring that the data is representative of the real-world population or problem being studied.

Removing any sensitive or personal information from the data to protect privacy.

Checking for and handling any missing or incorrect data.

Normalizing or standardizing the data to ensure that it is on a common scale.
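The imputation and scaling practices above can be sketched with scikit-learn, one of the libraries mentioned earlier. This is a minimal example on a made-up array; median imputation and standardization are assumptions chosen for illustration, not the only reasonable options. The key point is that the statistics are learned from the training data and then reused unchanged on new data:

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy numeric data with missing values (illustrative only).
X_train = np.array([[1.0, 200.0],
                    [2.0, np.nan],
                    [3.0, 400.0]])
X_new = np.array([[np.nan, 300.0]])

preprocess = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # fill NaNs with the training median
    ("scale", StandardScaler()),                    # zero mean, unit variance per column
])

# Learn medians, means and standard deviations from the training data only...
X_train_prepared = preprocess.fit_transform(X_train)
# ...then reuse those same statistics for any new data.
X_new_prepared = preprocess.transform(X_new)

print(X_train_prepared.round(3))
print(X_new_prepared.round(3))
```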

Feature Engineering

The second step in the machine learning pipeline is feature engineering. In this step, features are selected or created from the data to be used as input to the model. Feature engineering involves using domain knowledge of the data to create features that will enable the model to make accurate predictions.

There are many different types of features that can be used in machine learning, including numeric, categorical, binary, and ordinal features. The specific features to use will depend on the nature of the data and the problem being solved.

There are also many techniques that can be used for feature engineering, including:

Feature selection: This involves selecting a subset of relevant features from the data.

Feature extraction: This involves creating new features from existing ones using techniques such as principal component analysis or independent component analysis.

Feature aggregation: This involves combining multiple features into a single feature.

Feature transformation: This involves transforming the values of a feature to make them more suitable for machine learning algorithms, for example applying a logarithm to a heavily skewed variable.

Feature creation: This involves creating new features from scratch using domain knowledge.
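Several of these techniques can be sketched in a few lines. The example below assumes scikit-learn and NumPy and uses the Iris dataset bundled with scikit-learn; keeping two features, using two principal components, and the "petal area" product are arbitrary choices for illustration:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

X, y = load_iris(return_X_y=True)  # 150 samples, 4 numeric features

# Feature selection: keep the 2 original features with the highest ANOVA F-score.
selector = SelectKBest(score_func=f_classif, k=2)
X_selected = selector.fit_transform(X, y)
print("kept feature indices:", selector.get_support(indices=True))

# Feature extraction: build 2 new features as linear combinations of all 4.
pca = PCA(n_components=2)
X_extracted = pca.fit_transform(X)
print("variance explained:", pca.explained_variance_ratio_.round(3))

# Feature transformation: log1p compresses large values (useful for skewed data).
X_log = np.log1p(X)

# Feature creation / aggregation: combine petal length and width into one feature.
petal_area = X[:, 2] * X[:, 3]
```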

Effective feature engineering is crucial for building a good machine-learning model. The quality of the features can greatly impact the performance of the model, and selecting or creating the right features is a key part of the modelling process.

It is important to follow best practices when engineering features. Some best practices to keep in mind include:

Using domain knowledge to create features that are likely to be relevant to the problem.

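Making sure that features are on a similar scale before training a model, especially for scale-sensitive algorithms such as k-nearest neighbours, support vector machines, or models trained with gradient descent.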

Being mindful of the curse of dimensionality and avoiding adding too many features.

Using cross-validation, with feature selection and scaling performed inside each fold, so that the estimated performance of the model is not over-optimistic.
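The scaling and cross-validation practices above interact: if features are scaled or selected using the whole dataset before cross-validation, information from the validation folds leaks into training and the score becomes over-optimistic. A minimal sketch, assuming scikit-learn and its bundled breast cancer dataset (k=10 is an arbitrary choice), keeps those steps inside a pipeline so they are refitted in every fold:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Scaling and feature selection live inside the pipeline, so in every fold
# they are fitted on the training split only and never see the validation split.
model = make_pipeline(
    StandardScaler(),
    SelectKBest(score_func=f_classif, k=10),
    LogisticRegression(max_iter=1000),
)

scores = cross_val_score(model, X, y, cv=5)
print(f"accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")
```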

Model Training

The third step in the machine learning pipeline is model training. In this step, the model is trained on the prepared data. The specific type of model and the training process will depend on the nature of the data and the problem being solved.

There are many different types of machine learning algorithms that can be used for model training, including:

Supervised learning algorithms: These algorithms are trained on labelled data and make predictions based on the relationships between the features and the target variable. Examples include linear regression and support vector machines.

Unsupervised learning algorithms: These algorithms are trained on unlabelled data and find structure in it, such as groups of similar examples or compact representations, based on the relationships between the features. Examples include k-means clustering and autoencoders.

Reinforcement learning algorithms: These algorithms learn by interacting with an environment and receiving rewards or penalties based on their actions. They are often used in robotics and control systems.
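The difference between the first two families can be seen in a minimal sketch on synthetic data, assuming scikit-learn and NumPy (the line y = 3x + 2 and the two cluster centres are made up). The regression model is given labels to learn from; k-means only sees points and has to discover the groups itself. Reinforcement learning needs an interactive environment and is not shown:

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)

# Supervised: labelled pairs (x, y) where y is roughly 3x + 2 plus noise.
x = rng.uniform(0, 10, size=(100, 1))
y = 3 * x[:, 0] + 2 + rng.normal(0, 0.5, size=100)
reg = LinearRegression().fit(x, y)
print("slope:", reg.coef_[0].round(2), "intercept:", reg.intercept_.round(2))

# Unsupervised: no labels, only points; k-means groups them into clusters.
points = np.vstack([
    rng.normal(loc=(0, 0), scale=0.3, size=(50, 2)),
    rng.normal(loc=(5, 5), scale=0.3, size=(50, 2)),
])
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(points)
print("cluster sizes:", np.bincount(km.labels_))
```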

It is important to carefully consider which machine learning algorithm to use and to ensure that it is appropriate for the data and the problem at hand. It is also important to follow best practices when training a model, such as:

Splitting the data into training and test sets to evaluate the model's performance.

Using cross-validation to detect whether the model is overfitting to the training data.

Tuning the model's hyperparameters to optimize its performance.
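These three practices fit together as follows in a minimal sketch, assuming scikit-learn, the bundled Iris dataset and a support vector classifier; the parameter grid is an arbitrary example, not a recommended search space. Cross-validated tuning happens on the training set only, and the held-out test set is used once at the end:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Hold out 25% of the data; stratify so class proportions match in both splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# 5-fold cross-validation on the training set chooses the hyperparameters.
param_grid = {"C": [0.1, 1, 10], "gamma": ["scale", 0.1, 1]}
search = GridSearchCV(SVC(), param_grid, cv=5)
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print("mean CV accuracy:", round(search.best_score_, 3))
# The test set is touched only once, after tuning is finished.
print("test accuracy:", round(search.score(X_test, y_test), 3))
```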

Model Evaluation

The fourth step in the machine learning pipeline is model evaluation. In this step, the model is evaluated to assess its performance. This may involve calculating evaluation metrics such as accuracy or F1 score and comparing the model's performance to that of baseline models or to human performance.
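Comparing against a baseline can be sketched as follows, assuming scikit-learn and its bundled breast cancer dataset; logistic regression is just one example model. The baseline always predicts the most frequent class, so any useful model should clearly beat it on both metrics:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

# Baseline: always predicts the most frequent class seen in training.
baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X_train, y_train)

results = {}
for name, clf in [("baseline", baseline), ("logistic regression", model)]:
    pred = clf.predict(X_test)
    results[name] = (accuracy_score(y_test, pred), f1_score(y_test, pred))
    print(f"{name:>20}: accuracy={results[name][0]:.3f}  F1={results[name][1]:.3f}")
```

Because the majority class here is also the positive class, the baseline's F1 score looks deceptively respectable, which is one reason to report more than one metric.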

It is important to carefully consider which evaluation metrics to use and to ensure that they are appropriate for the data and the problem at hand. It is also important to follow best practices when evaluating a model, such as:

Using a holdout test set to evaluate the model's performance on unseen data.

Using cross-validation to get a more robust estimate of the model's performance.

Considering the trade-off between precision and recall when evaluating a model.
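The precision/recall trade-off usually comes from the decision threshold applied to a model's scores. A minimal sketch with ten hypothetical labels and predicted probabilities (made up for illustration), assuming scikit-learn's metric functions:

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Hypothetical true labels and predicted probabilities for 10 examples.
y_true = np.array([1, 1, 1, 1, 0, 1, 0, 0, 0, 0])
y_score = np.array([0.95, 0.85, 0.75, 0.65, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10])

results = {}
for threshold in (0.3, 0.5, 0.7):
    # Predict the positive class whenever the score reaches the threshold.
    y_pred = (y_score >= threshold).astype(int)
    p = precision_score(y_true, y_pred)
    r = recall_score(y_true, y_pred)
    results[threshold] = (p, r)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
# On this data: 0.3 -> (0.62, 1.00), 0.5 -> (0.80, 0.80), 0.7 -> (1.00, 0.60)
```

Raising the threshold makes the model more cautious, so precision goes up while recall goes down; which point is best depends on whether false positives or false negatives are more costly for the problem.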

Model Deployment

The final step in the machine learning pipeline is model deployment. In this step, the model is deployed to be used in production. This may involve tasks such as integrating the model into an application or setting up a system to continuously retrain the model as new data becomes available.
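One common pattern, sketched here under the assumption that scikit-learn and joblib are available, is to save the fitted preprocessing and model together as a single artifact and load it in the serving application. The file name and the `predict` handler are hypothetical; a real service would wrap this in a web framework or batch job:

```python
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Training side: fit preprocessing + model together and save them as one artifact.
X, y = load_iris(return_X_y=True)
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X, y)

model_dir = Path(tempfile.mkdtemp())
model_path = model_dir / "iris_model.joblib"  # hypothetical artifact name
joblib.dump(pipeline, model_path)

# Serving side: load the artifact once at start-up, then answer requests.
# joblib files are pickles, so only load artifacts from a trusted source.
loaded = joblib.load(model_path)

def predict(features: list[float]) -> int:
    """Hypothetical request handler: one flower's 4 measurements -> class id."""
    return int(loaded.predict([features])[0])

print(predict([5.1, 3.5, 1.4, 0.2]))
```

Saving the whole pipeline, rather than the model alone, ensures production inputs receive exactly the same preprocessing as the training data.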

It is important to carefully consider the deployment environment and to ensure that the model is scalable and can handle the expected workload. It is also important to follow best practices when deploying a model, such as:

Ensuring that the model is robust and can handle a wide range of inputs.

Monitoring the model's performance in production and updating it as needed.

Ensuring that the model is secure and that any sensitive or personal data is protected.
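The robustness and monitoring practices above can start very simply. The sketch below assumes NumPy and SciPy, and a model that expects four numeric features (`N_FEATURES`, `validate_batch` and `drifted_features` are hypothetical names). It rejects malformed input and uses a two-sample Kolmogorov-Smirnov test as one possible drift check, comparing each feature's live distribution with its training distribution:

```python
import numpy as np
from scipy.stats import ks_2samp

N_FEATURES = 4  # assumption: the deployed model expects 4 numeric features


def validate_batch(X) -> np.ndarray:
    """Reject malformed input before it reaches the model (illustrative checks only)."""
    X = np.asarray(X, dtype=float)
    if X.ndim != 2 or X.shape[1] != N_FEATURES:
        raise ValueError(f"expected shape (n, {N_FEATURES}), got {X.shape}")
    if not np.isfinite(X).all():
        raise ValueError("input contains NaN or infinite values")
    return X


def drifted_features(train: np.ndarray, live: np.ndarray, alpha: float = 0.01) -> list[int]:
    """Indices of features whose live distribution differs from training (KS test)."""
    return [j for j in range(train.shape[1])
            if ks_2samp(train[:, j], live[:, j]).pvalue < alpha]


# Simulated example: feature 2 shifts in production.
rng = np.random.default_rng(0)
train = rng.normal(size=(1000, N_FEATURES))
live = rng.normal(size=(500, N_FEATURES))
live[:, 2] += 3.0
print("drifted features:", drifted_features(train, validate_batch(live)))
```

A flagged feature is a signal to investigate and possibly retrain, not proof that the model has become worse; tracking prediction quality once true labels arrive remains the more direct check.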

Conclusion

A machine learning pipeline is a structured process for building and deploying machine learning models. By working through data collection and preparation, feature engineering, model training, model evaluation, and model deployment, it is possible to build effective models for a wide range of applications. Each step affects the quality of the final model, so following best practices at every stage helps ensure that the model is accurate, robust, and scalable, and that it can have a real impact across many industries.

Thanks for reading ❤️